Explainable AI is Responsible AI

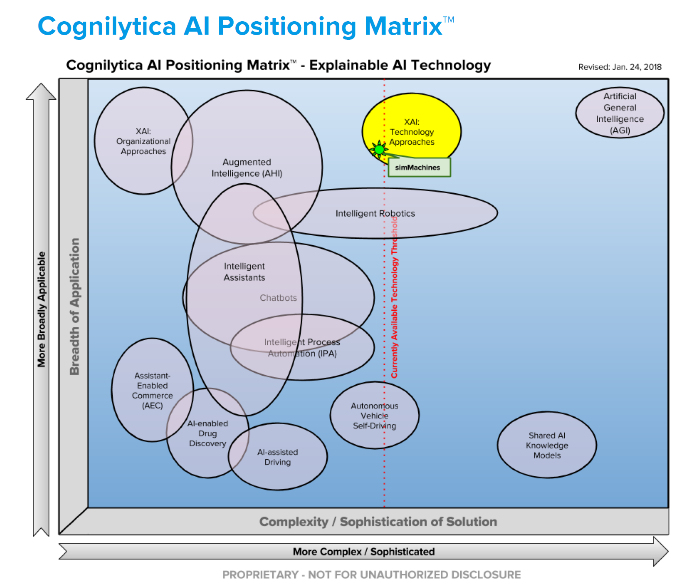

Cognilytica Research’s January 2018 briefing note on simMachines highlights the black box challenge of today’s AI and machine learning technologies and the fundamental problems that a lack of explainability causes. Business users need to understand, on an ongoing basis, what machine learning algorithms are deciding and why, for ethical, legal, and business reasons. As AI adoption accelerates, major firms around the world increasingly recognize this as a primary challenge.

“The more we involve AI in our daily lives, the more we need to be able to trust the decisions that autonomous systems make. However, it’s becoming harder and harder to understand how these systems arrive at their decisions. There are many different supervised and unsupervised ways of training AI systems with Deep Learning and Machine Learning, and these systems are guided with approaches in which a human or the system itself determines what is correct and incorrect. But once this system is operating, the human supervisors are no longer there, and so we don’t know how the “black box” of the AI system is truly operating.”

The report asks, “but what exactly do we want AI systems to explain? In general, AI implementers and users want the AI systems to answer these questions which we don’t currently have answers to:

- Why did the AI system do that?

- Why didn’t the AI system do something else?

- When did the AI system succeed?

- When did the AI system fail?

- When does the AI system give enough confidence in the decision that you can trust it?

- How can the AI system correct an error?

“Cognilytica believes that XAI is an absolutely necessary part of making AI work practically in real-world business and mission-critical situations. Without it, AI will be used for either trivial activities or not at all.”

Once you have these factors for each prediction, you can execute real-time interactions with far greater insight into the context of each one, because the prediction factors reveal the key characteristics associated with the prediction, broken down by data element or factor.

The reasons one customer calls to cancel service or make a purchase can be completely different from the reasons another customer does. It is therefore now possible to treat each individual interaction differently: a similarity-based machine learning prediction supplies weighted factors, and those factors drive the selection of one offer and treatment over another.
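To make the idea of per-prediction weighted factors concrete, here is a minimal sketch. It is not simMachines' proprietary method, which is not described here; it substitutes a plain k-nearest-neighbor vote and a simple contrast score per feature. The function name `explain_prediction`, the feature names, and the sample customers are all hypothetical and exist only for illustration.

```python
from collections import Counter
from math import sqrt

# Hypothetical, pre-scaled (0..1) customer features; not real data.
FEATURES = ["price_sensitivity", "support_tickets", "tenure"]
TRAIN_X = [
    [0.9, 0.2, 0.5], [0.8, 0.3, 0.4],   # cancelled, price-driven
    [0.2, 0.9, 0.5], [0.3, 0.8, 0.6],   # cancelled, support-driven
    [0.2, 0.1, 0.5], [0.3, 0.2, 0.6], [0.1, 0.2, 0.4],  # stayed
]
TRAIN_Y = ["cancel", "cancel", "cancel", "cancel", "stay", "stay", "stay"]


def explain_prediction(train_x, train_y, feature_names, query, k=3):
    """Return (label, factors) for one query using a plain k-NN vote.

    factors is a list of (feature_name, weight) sorted by weight, where the
    weight reflects how much closer the query sits to its same-label
    neighbors than to the training rows of other labels on that feature.
    This scoring rule is an illustrative assumption, not a standard.
    """
    def distance(row):
        return sqrt(sum((a - b) ** 2 for a, b in zip(row, query)))

    # Indices of the k most similar training rows.
    nearest = sorted(range(len(train_x)), key=lambda i: distance(train_x[i]))[:k]
    label = Counter(train_y[i] for i in nearest).most_common(1)[0][0]

    same = [train_x[i] for i in nearest if train_y[i] == label]
    other = [x for x, y in zip(train_x, train_y) if y != label]

    scores = {}
    for f, name in enumerate(feature_names):
        same_mean = sum(r[f] for r in same) / len(same)
        # With no contrasting rows, the feature gets a score of zero.
        other_mean = sum(r[f] for r in other) / len(other) if other else same_mean
        scores[name] = abs(query[f] - other_mean) - abs(query[f] - same_mean)

    # Keep only features that pull toward the predicted label, then normalize.
    positive = {n: max(s, 0.0) for n, s in scores.items()}
    total = sum(positive.values())
    weights = {n: (s / total if total else 0.0) for n, s in positive.items()}
    return label, sorted(weights.items(), key=lambda kv: kv[1], reverse=True)


if __name__ == "__main__":
    for customer in ([0.85, 0.25, 0.5], [0.25, 0.85, 0.55]):
        label, factors = explain_prediction(TRAIN_X, TRAIN_Y, FEATURES, customer)
        print(label, factors[0][0])
```

In this toy data both sample customers receive the same prediction, but the top-weighted factor differs, which is the property that would let each interaction be routed to a different offer or treatment.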

Similarity creates uniquely valuable outputs and handles certain applications that other machine learning methods can’t. It reveals to humans what is in the mind of the machine so that they can decide what actions to take. It provides powerful insights and capabilities that, through transparency, engender trust and understanding.

simMachines technology is purpose-built to solve the black box problem that the report outlines. Our proprietary similarity-based machine learning method was developed by our founder, Arnoldo Mueller, Ph.D., to answer these very questions. Arnoldo began this quest over ten years ago to ensure that business users could easily “see into the mind of the machine” and understand, for decision-making purposes, why the machine makes the predictions it does.

Explainable AI Software

Today, simMachines technology is deployed in large-scale applications, providing predictions with “the Why” at the level of each individual prediction. Similarity-based machine learning is the only method that can pull through detailed sets of dynamically generated factors for every prediction, and it therefore offers a range of unique applications based on the resulting insights. The software routinely processes 1.6M predictions per second on a single machine and returns real-time predictions in ~8–20 milliseconds. simMachines can match or outperform any other machine learning method in prediction accuracy while providing an explanation for every prediction. simMachines Explainable AI can also provide the Why behind other algorithms by “mirroring” their predictions. As a result, simMachines offers an enterprise capability to explain algorithms that are already in place and that business users are happy with in terms of performance, but that still lack an explanation.

In conclusion, Cognilytica Research states that “enterprise, public and private sector organizations exploring the need to adopt AI and ML in a transparent and explainable way should consider the simMachines offering as a component of their solution.” We couldn’t agree more!